Someone asked me the other day why the Bloomberg Terminal's colors are amber on black.

I knew the answer, of course, because I worked there for three decades. But I wanted to confirm it, so I put the question to Perplexity AI, an answer engine built on large language models.

It’s good to trust; better to verify.

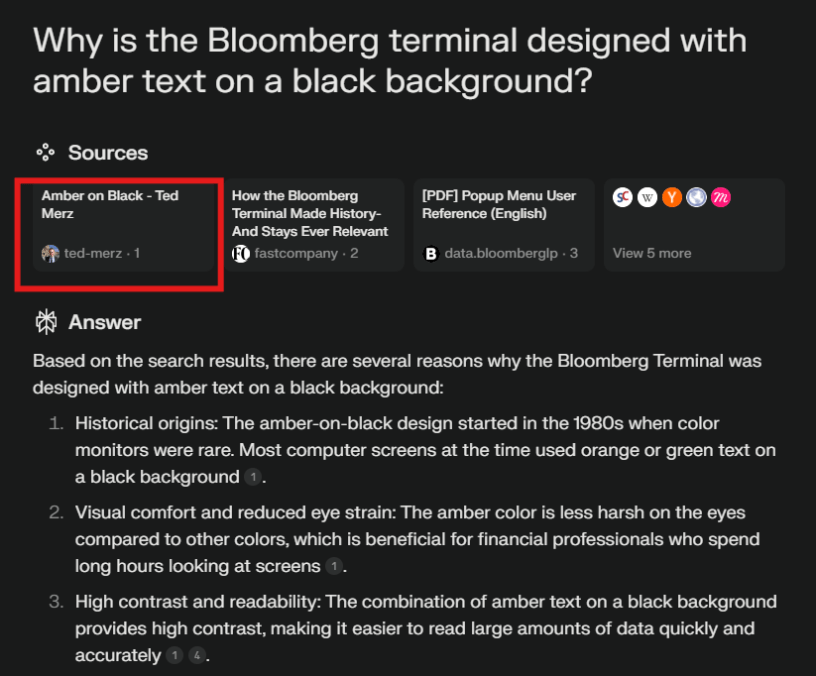

Perplexity came back with eight solid explanations, including the fact that amber and green were default computer monitor colors when Bloomberg started in the early 1980s.

One of Perplexity’s best features is that it provides annotations to tell you the source of the information.

When I looked closer, however, I realized that five of the eight answers were sourced to a blog post I wrote.

I had forgotten I wrote the piece three years ago.

It was a bit of a shock to realize I was the source for the answer to my own question.

People worry a lot about generative AI spitting out falsehoods, or hallucinations.

A related concern is that answers rely on unvetted sources.

I am just a random guy writing on the Internet.

But I was ranked as the top authority for the question I asked, seven sources above the Wikipedia entry for the Bloomberg Terminal, which I used as source material.

There has always been a problem with “fake” information on the Internet. People who Google questions tend to check the source to assess the credibility of the information.

Some large language models provide answers without citing sources, making it harder to judge veracity.

This may become a bigger problem as more websites and applications leverage LLMs to generate answers.

If you don’t believe me, just ask Perplexity.