CEOs say their employees aren’t using ChatGPT and other AI tools at work.

Academic studies show the opposite.

One of the paradoxes of generative AI is that two years after it was unveiled, many companies severely restrict its usage. They have valid concerns about errors and hallucinations.

Employees, meanwhile, are covertly using text and image generation to get more done. According to recent research, 40% of Americans have used AI. One quarter of workers use it weekly and one in nine daily.

Ethan Mollick, an associate professor at Wharton and a prolific poster on AI, wrote in his blog that employees have no incentive to tell management. They fear being fired and want to appear to be working hard.

A story about Elizabeth Laraki is a good place to start in understanding what's happening here.

Laraki is a design partner at Electric Capital who previously worked at Google Maps, YouTube and Facebook. Recently, she sent a headshot to the organizers of a conference where she was slated to speak.

When she saw the photo on the conference site, however, something didn’t seem right.

She looked closer and noticed that in the online photo her blouse had an extra button undone.

According to her post on LinkedIn:

“Was my bra always showing on my profile pic and I’d never noticed? Weird… So I opened my original photo. Nope. No bra showing. I put the two photos side by side and I was like WTF. Someone edited my photo to unbutton my blouse and reveal a made-up hint of a bra or something else underneath. 😒”

She emailed the organizers, who promised to get to the bottom of it.

The answer reveals a lot about generative AI and explains why corporate adoption of the technology is going so slowly. It's a thorny problem, and situations like it are going to become more commonplace.

Laraki described what she discovered:

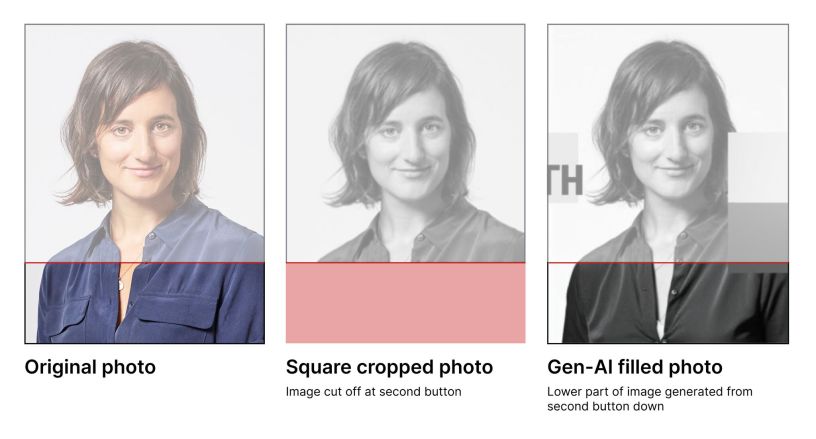

“I had originally sent them a vertical profile photo. They had cropped all speaker photos to be square for their website. The person running their social media didn’t have my original image and she grabbed the square, cropped image from their website. She wanted it to be more vertical for the ad, so she used an AI expand image tool to make the photo taller.”

The significant part comes next. Laraki realized that AI training sets, the photos used to develop the patterns that image-generation models recognize and reproduce, have a bias.

“AI invented the bottom part of the image…in which it believed that women’s shirts should be unbuttoned further, with some tension around the buttons, revealing a little hint of something underneath. 😳”

Right now, this is a feature of AI, not a bug.

The creators of these AI models are working on the problem, according to Michael Parekh, who ran internet research for Goldman Sachs for twenty years.

“They know they have to deal with it. But it’s a challenge,” he told me.